In a recent project for a Swedish startup, we’ve taken a leap forward in optimizing cloud storage solutions largely due to the utilization of Athena partitioning. In this article, I will describe how to use the same idea in the context of S3 access logs.

Amazon S3 server access logging now supports automatic date-based partitioning for log delivery. This allows services like Amazon Athena to improve performance and reduce cost when querying logs.

How to enable log delivery with date-based partitioning?

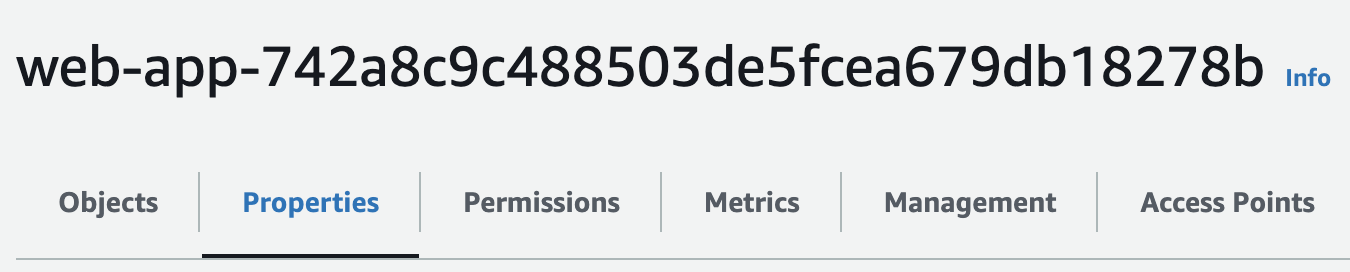

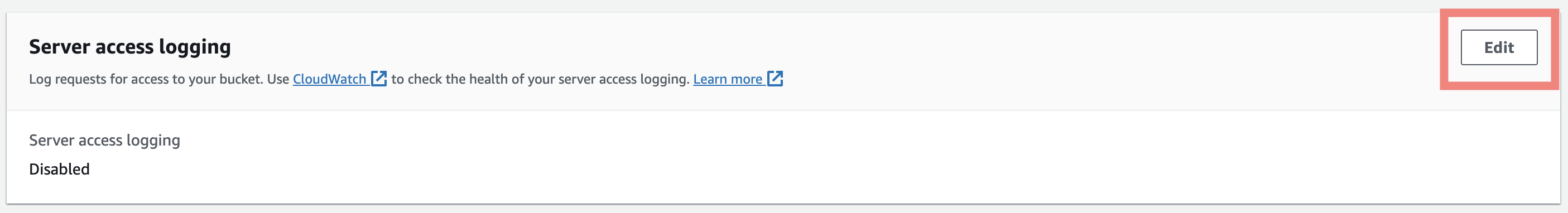

- Go to the Properties tab of the source bucket:

- Find the section for server access logging:

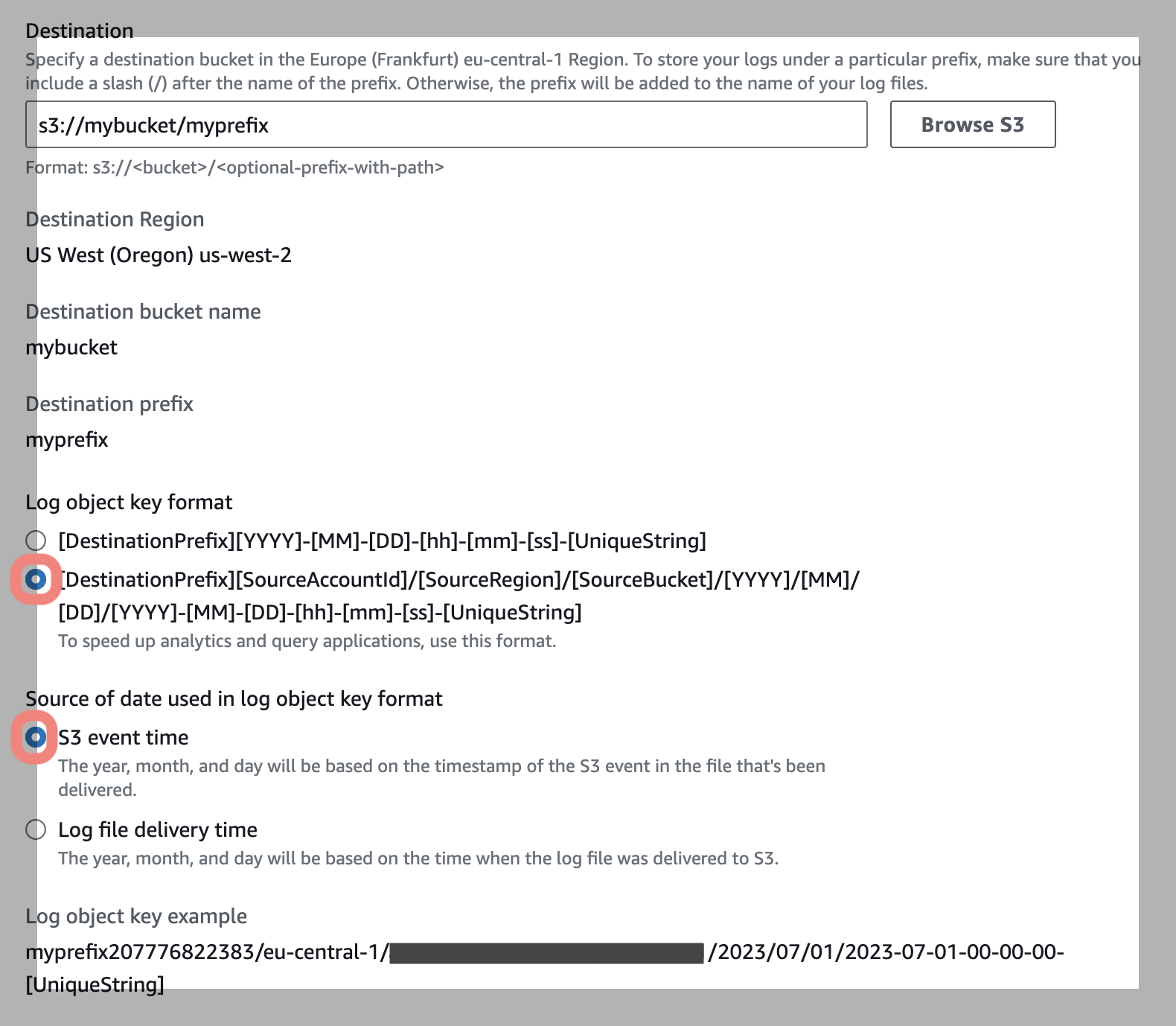

- Specify the target bucket and prefix where you want to store the logs and set log object key format to the second option.

You can find more details here: How do I enable log delivery?

How to query partitioned s3 access logs using Athena?

I’ve created terraform module that creates an AWS Glue table designed specifically to query Amazon S3 server access logs in the new date-based partitioning format. Simply specify the bucket name and prefix where the logs are stored and the module will create a table:

module "s3_access_logs_glue_table" {

source = "fivexl/s3-access-logs-athena-table/aws"

name = "s3_access_logs"

database_name = aws_glue_catalog_database.s3_access_logs_db.name

location = "s3://my-logs-bucket/my-logs-prefix"

}

After that you can query the table using Athena:

SELECT *

FROM

s3_access_logs

WHERE

accountid = '111111111111'

and bucket = 'my-bucket' // name of the bucket for which you enabled logging

and region = 'eu-central-1' // region where the bucket is located

and year = '2023'

and month = '11'

and day = '25'

This way Athena will only scan the logs for the specified date and will not incur any additional cost for scanning the entire log bucket (as it happens by default).

If you have any questions or suggestions, feel free to contact me on LinkedIn, or Twitter

See also in S3, Athena, logs, partitioning and AWS